The rapid adoption of new generative artificial intelligence (GenAI) applications, led by ChatGPT, has sparked massive investments in dedicated, highly power-intensive data-processing capacity. We estimate that the installed data centre capacity for AI training will multiply from roughly 5GW worldwide today to more than 40GW by 2027, a level that, if run continuously, would exceed the annual power consumption of the UK. Strong efforts and comprehensive solutions will be required to address the resulting energy challenges from an environmental, security and cost perspective. As such, the Polar Capital Smart Energy Fund invests in leading technology and solutions providers in the areas of energy-efficient AI data-processing chips, power and thermal management of AI servers and data centres, and low-power optical data transmission.

Powering AI’s multi-sector opportunity

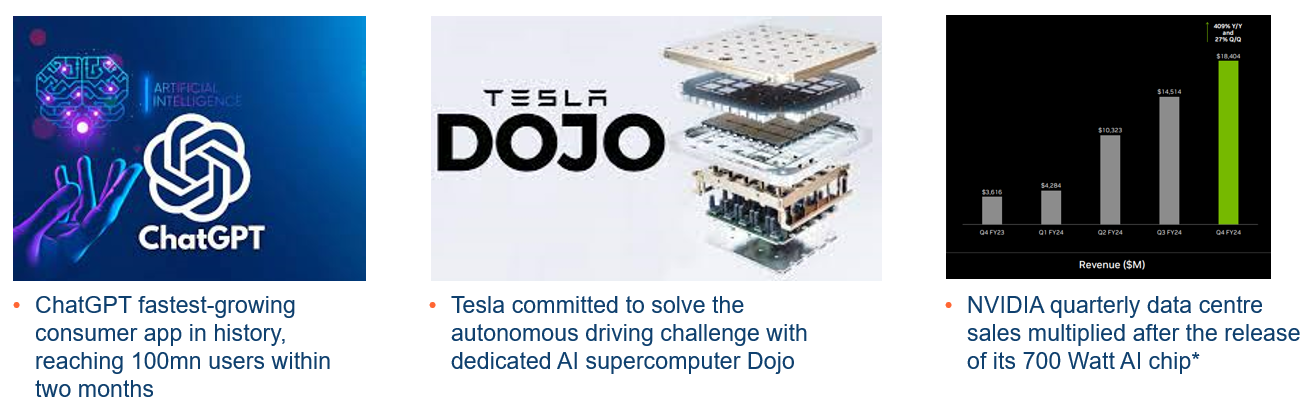

Launched in November 2022, ChatGPT became the fastest-growing consumer app in history, reaching 100 million users within two months. Other applications based on large transformer language models, such as Google Gemini (previously Bard), Meta LLaMA, xAI Grok, Perplexity AI and, in Europe, Mistral AI, soon followed. Training large language models (LLMs) requires extensive computing performance, in particular from power-hungry graphics processing units (GPUs), whose parallel-processing design makes them well suited to AI training workloads. The question of how to supply this power comes into sharp focus given that AI is affecting a wide range of sectors, from healthcare, education and finance to manufacturing. In the transportation sector, the world’s first AI supercomputer dedicated to solving the challenge of training autonomous driving systems came into operation in July 2023.

The installed data centre capacity we anticipate will require massive expansions in power generation and grid infrastructure (“you need transformers to run transformers”1) over the coming years. Because the world’s leading AI infrastructure providers, including Alphabet, Amazon, Meta Platforms and Microsoft, are fully committed to science-based net-zero targets, renewable energy from solar and wind power is clearly prioritised for the build-up of the incremental power generation capacity. In fact, in 2023 four of the largest technology companies, led by Amazon with 8.8GW, were among the top 10 corporate buyers of solar and wind energy through power purchase agreements (PPAs)2.
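To relate the installed-capacity estimate (roughly 5GW today, more than 40GW by 2027) to an annual energy figure, the conversion can be sketched as below. This is a minimal illustration, not the Fund's model: the function name `annual_energy_twh` and the `utilisation` parameter are our own assumptions, and full utilisation around the clock is an upper bound rather than a forecast.

```python
# Illustrative only: converts installed capacity (GW) into annual energy (TWh).
# The utilisation factor is an assumption; 1.0 means running flat out all year.
HOURS_PER_YEAR = 8760  # 365 days x 24 hours; leap years ignored


def annual_energy_twh(capacity_gw: float, utilisation: float = 1.0) -> float:
    """Annual energy in TWh drawn by `capacity_gw` at an average utilisation."""
    if not 0.0 <= utilisation <= 1.0:
        raise ValueError("utilisation must be between 0 and 1")
    gwh_per_year = capacity_gw * HOURS_PER_YEAR * utilisation
    return gwh_per_year / 1000.0  # GWh -> TWh


for gw in (5, 40):
    print(f"{gw} GW at full utilisation: {annual_energy_twh(gw):.1f} TWh/year")
# 5 GW at full utilisation: 43.8 TWh/year
# 40 GW at full utilisation: 350.4 TWh/year
```

A lower `utilisation` value scales the result proportionally, so the comparison with a country's annual consumption depends on how heavily the capacity is actually used.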

Curbing AI’s power needs

The massive growth in AI data centre capacity and the related electricity consumption bring huge energy challenges from an environmental, security and cost perspective. Besides the provision of clean energy in the form of renewable power, including energy storage and transmission infrastructure, it will be essential to promote the energy efficiency of AI data centres (servers and data processors). In addition, government initiatives aim for higher energy efficiency in data centres. The European Union has launched several instruments3 targeting the improvement of Power Usage Effectiveness (PUE; the ratio of the total amount of energy used by a data centre facility to the energy delivered to the IT equipment). PUE is at 1.6x today, with the ultimate goal close to 1x, at which point nearly all power is consumed by the IT equipment.
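The PUE ratio defined above, and what it implies for overhead, can be worked through in a few lines. This is a sketch under our own naming (`pue` and `overhead_share` are hypothetical helpers), and the 1,600 and 1,000 kWh inputs are illustrative values chosen to reproduce the 1.6x figure.

```python
# Illustrative only: PUE = total facility energy / energy delivered to IT equipment.
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness for a given metering period."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be below IT energy")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(pue_value: float) -> float:
    """Fraction of total facility energy that does not reach the IT equipment."""
    if pue_value < 1.0:
        raise ValueError("PUE cannot be below 1")
    return 1.0 - 1.0 / pue_value


# Hypothetical metering values chosen to give a PUE of 1.6x.
example = pue(total_facility_kwh=1600.0, it_equipment_kwh=1000.0)
print(f"PUE: {example:.1f}x, overhead share: {overhead_share(example):.1%}")
# PUE: 1.6x, overhead share: 37.5%
```

At a PUE of 1.6x, 37.5% of the facility's energy goes to cooling, power conversion and other overhead; as PUE approaches 1x, that share approaches zero.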

As companies seek to realise potential energy savings, we are finding attractive opportunities in three areas: custom chip developments as alternatives to general-purpose GPUs; power and thermal management, namely next-generation power electronics and liquid cooling; and low-power optical data transmission.

Unlocking efficiency in AI workloads

Today’s AI processing in data centres is dominated by GPUs, which are well suited to handling the massive number of computations thanks to their parallel processing architecture, with thousands of cores as well as high memory bandwidth. However, with the next generation of the leading AI GPU reaching 1000W peak power, alternatives to general-purpose GPUs could become more appealing.

One option is ASICs (application-specific integrated circuits), which are custom designed to perform an individual customer workload very efficiently. Each of the world’s leading AI companies has internally developed custom data processors optimised for its individual AI workloads (no one knows the individual workloads better than the AI companies themselves). This includes AI training chips as well as AI inference chips used in data centres and edge applications. Semiconductor companies, including intellectual property (IP) vendors and ASIC services providers, support the AI companies with IP and design of the custom chips. Manufacturing is outsourced to foundry partners using the most advanced energy-efficient process nodes. The current generation of the leading AI companies’ ASICs allows up to a 45% reduction in power consumption compared to general-purpose GPUs4. While the penetration of custom AI ASICs today is still relatively low, their efficiency advantages, which lead to lower energy consumption and related costs, provide attractive growth opportunities for the enabling semiconductor companies in the Fund’s investment universe.

New materials enabling energy-efficient power supply

With the next generation of GPUs and tight latency requirements driving the power densities of AI server racks up to 4x those of conventional servers, the efficiency of the power supply becomes increasingly important. Next-generation power semiconductor materials such as gallium nitride (GaN) and silicon carbide (SiC) cut power conversion losses by up to 70% compared to conventional silicon-based components5, helping to achieve 97.5% power supply unit efficiencies6.
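To give a feel for what these percentages mean at rack level, the arithmetic can be sketched as follows. Everything apart from the 97.5% efficiency and the 70% loss reduction is an assumption made for illustration: the 100kW rack load and the 94% baseline efficiency are hypothetical, and the two source figures are treated as separate claims rather than derived from one another.

```python
# Illustrative only: power drawn and lost by a power supply unit (PSU).
def psu_input_and_loss_kw(load_kw: float, efficiency: float):
    """Return (input power, conversion loss) in kW for a given output load."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    input_kw = load_kw / efficiency
    return input_kw, input_kw - load_kw


def reduced_loss_kw(loss_kw: float, reduction: float) -> float:
    """Loss remaining after cutting `loss_kw` by the fraction `reduction`."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    return loss_kw * (1.0 - reduction)


RACK_LOAD_KW = 100.0        # hypothetical AI rack load
BASELINE_EFFICIENCY = 0.94  # hypothetical older PSU, not from the source
TARGET_EFFICIENCY = 0.975   # PSU efficiency cited in the text

for label, eff in (("baseline", BASELINE_EFFICIENCY), ("target", TARGET_EFFICIENCY)):
    input_kw, loss_kw = psu_input_and_loss_kw(RACK_LOAD_KW, eff)
    print(f"{label}: input {input_kw:.2f} kW, loss {loss_kw:.2f} kW")

# A 70% cut applied to a hypothetical 10 kW conversion loss.
print(f"remaining loss: {reduced_loss_kw(10.0, 0.70):.1f} kW")
```

Under these assumptions, the same 100kW load wastes roughly 6.4kW at 94% efficiency and roughly 2.6kW at 97.5%, and every kilowatt not lost as heat also reduces the cooling load.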

Rapid heat transfer through liquid cooling

Cooling is one of the largest sources of energy consumption in AI data centres. With conventional air cooling reaching its limits in the face of the ever-increasing wattages of AI data processors and the related power density extremes of AI server racks, next-generation liquid cooling technologies, which have higher thermal transfer properties than air, offer a more efficient alternative. Liquid cooling can be deployed in various ways within a data centre, ranging from direct-to-chip cooling and rack rear-door cooling to next-generation immersion cooling, where the entire printed circuit board with its electronic components is fully submerged in an electrically insulating liquid. Applying direct-to-chip liquid cooling technology to today’s leading AI training GPU cuts power consumption by 30% and reduces rack space by 66%7. While only c5% of data centres are liquid cooled today8, the sharp rise in power density related to AI workloads will drive a transition from air cooling to thermal management solutions with a higher liquid cooling content over the coming years. Our investment universe includes companies offering air/liquid cooling components and systems as well as thermal management infrastructure solutions for entire data centres.

Low-power optical data networks

The high-bandwidth, low-latency interconnection of thousands of GPUs in an AI data centre creates new challenges for data networks. This is particularly relevant for power consumption, which could amount to dozens of megawatts per AI cluster9. Hence, there is a growing need for energy-efficient communication: replacing electrical interconnects with optical fibre technologies increases the data transmission bandwidth significantly while strongly reducing transmission losses. Advanced modulation schemes further reduce power consumption by a factor of two. Higher levels of integration enabled by silicon photonics technology and linear drive optics promise further improvement in energy efficiency.

With AI functionality taking centre stage and seemingly only growing in both capability and corporate interest, addressing the enormous energy challenges to power AI applications is likely to be a much-needed, yet overlooked, component of the AI revolution. Managing an efficient approach to such a power-intensive technology means looking beyond the headline technology names and concentrating on the infrastructure that will allow them, and their rapidly accelerating scope for AI usage, to keep up with demand.

The Polar Capital Smart Energy Fund invests across the globe in companies that offer technologies aiming to tackle exactly that, including providers of power and thermal management solutions as well as optical data transmission and energy-efficient data processing technologies for AI data centres.

1. Quote from Elon Musk at the Bosch Connected World conference, 29 February 2024

2. BloombergNEF Trend Newsletter, “Amazon Is Top Green Energy Buyer in a Market Dominated by US”, 22 February 2024

3. The EU Code of Conduct for Data Centres – towards more innovative, sustainable and secure data centre facilities, 5 September 2023

4. Norman P. Jouppi, George Kurian, Sheng Li, Peter Ma, Rahul Nagarajan, Lifeng Nai, Nishant Patil, Suvinay Subramanian, Andy Swing, Brian Towles, Cliff Young, Xiang Zhou, Zongwei Zhou, David Patterson. 2023. TPU v4: An Optically Reconfigurable Supercomputer for Machine Learning with Hardware Support for Embeddings: Industrial Product. In The 50th Annual International Symposium on Computer Architecture (ISCA ’23), June 17–21, 2023, Orlando, FL, USA. ACM, New York, NY, USA, 14 pages. https://doi.org/10.1145/3579371.3589350.

5. Rohm Semiconductor, https://www.rohm.de/sic/sic-mosfet

6. Delta Electronics, https://www.deltaww.com/ja-jp/news/AI-Server-power

7. NVIDIA Computex 2022 Liquid Cooling HGX H100 & H100 PCIe

8. nVent, 12 April 2023, “Driving Innovation in Precision Liquid Cooling Through our Iceotope Collaboration”, https://www.nvent.com/en-us/resources/news/driving-innovation-precision-liquid-cooling-through-our-iceotope-collaboration

9. Meta, Open Compute Project (OCP) summit, 17-19 October 2023, San Jose